Batch Statements with Spring Boot and Hibernate

Enable batch inserts and updates in Hibernate to improve write performance in PostgreSQL and CockroachDB

In this post we will look at how enabling batch statements will improve write performance when using Spring Data JPA and Hibernate against both PostgreSQL and CockroachDB.

Example Code

The code examples in this post are available in Github.

Introduction

Remote calls are always expensive, so reducing the number of roundtrips between the application and the database will improve both read and write performance significantly.

One technique for the read side is caching (with certain caveats). The first level session cache in Hibernate already does that out of the box. The second level cache can also be used to further enhance caching across conversational sessions using Ehcache or across VM instances using a distributed cache like Hazelcast.

Another technique for the write side is to group statements together using batch INSERT and UPDATE statements, and that's what this post is about.

Batching is disabled by default in Hibernate. Enabling it is a low-hanging fruit to improve write performance in most cases. There's just a few configuration settings needed to enable batching and you usually don't need to change any code.

Demo Application

To demonstrate this we are going to use a simple e-commerce demo application. The code is available in Github and its a working example driven by a few integration tests.

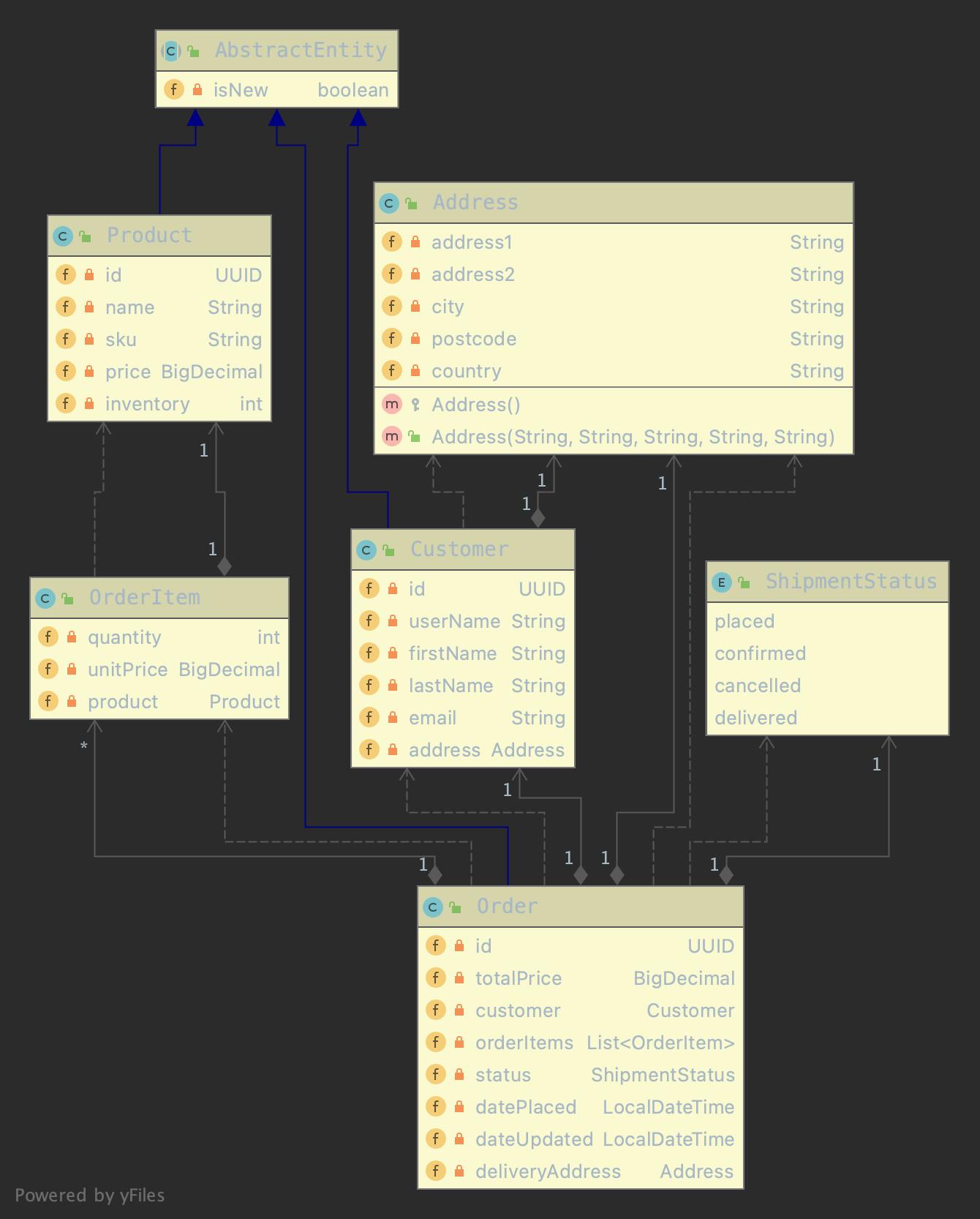

Entity Model

It's a classic example of a basic e-commerce application with products, purchase orders, order items and customers. The entity model is large enough to highlight how Hibernate works under the hood in terms of ordering inserts, updates and applying batching once enabled.

Let's also take a quick look at the Order entity to get the picture:

@Entity

@Table(name = "orders")

@TypeDef(name = "custom_enum", typeClass = ShipmentStatusEnumType.class)

public class Order extends AbstractEntity<UUID> {

...

@ElementCollection(fetch = FetchType.LAZY)

@JoinColumn(name = "order_id", nullable = false)

@JoinTable(name = "order_items", joinColumns = @JoinColumn(name = "order_id"))

@OrderColumn(name = "item_pos")

@Fetch(FetchMode.SUBSELECT)

private List<OrderItem> orderItems = new ArrayList<>();

...

}

One highlight is that it uses the @ElementCollection mapping for order items. The reason being that OrderItem is modelled as a join table with attributes rather than a separate entity with a surrogate ID. It's therefore an embeddable type, meaning its lifecycle is entirely controlled by the owning Order entity.

The primary index of the order_items join table will therefore be a composite primary key of order_id and order_pos which is just the line number in the purchase order. This is just a modelling detail and not much related to batching, but we will use it for batching multi-entity inserts and updates. It could just as well have been modelled as a separate entity with a bi-directional @OneToMany mapping.

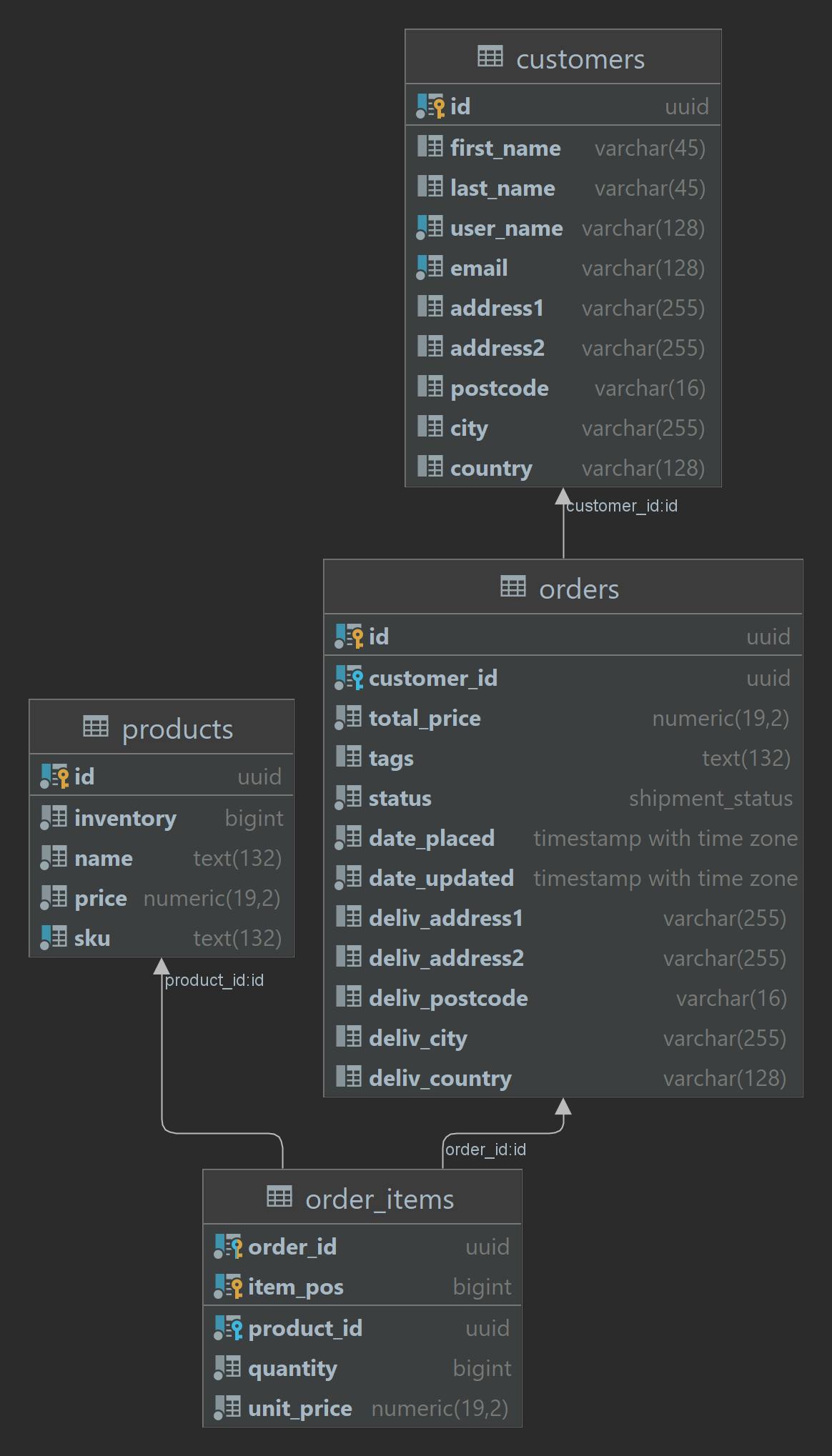

Table Schema

Let's throw in the entity-relation model as well for completion:

Order Creation

The main demo part is focusing on order creation. It begins by selecting a single customer and a few products, then creates an order and one order item for each product. In total there are a few initial queries, one insert per order and one insert per order item.

public void newOrders(int orderCount, int productCount, Consumer<Order> callback) {

List<Customer> allCustomers = customerRepository.findAll();

List<Product> allProducts = productRepository.findAll();

IntStream.rangeClosed(1, orderCount).forEach(value -> {

Order.Builder ob = Order.builder().withCustomer(RandomData.selectRandom(allCustomers));

RandomData.selectRandomUnique(allProducts, productCount).forEach(product ->

ob.andOrderItem()

.withProduct(product)

.withQuantity(5)

.then());

callback.accept(ob.build());

});

}

The callback in this case is a functional interface with side-effects, which is just to write the transient orders straight to the database. By default the orders are persisted by Hibernate passing single statements in one logical transaction. Using batching however, we can see that both the order's and order items are batched together.

The next part is to UPDATE the order status with and without batching. This could be done in a bulk operation but for the purpose of demonstration is done using read-modify-write workflow.

The test code that creates the orders:

@org.junit.jupiter.api.Order(1)

@ParameterizedTest

@ValueSource(ints = {10, 250, 500, 1500})

public void whenCreatingSingletonOrders_thenSucceed(int numOrders) {

Timer.timeExecution("placeOrder_singleton",

() -> testDoubles.newOrders(numOrders, 4, order -> orderService.placeOrder(order)));

}

@org.junit.jupiter.api.Order(2)

@ParameterizedTest

@ValueSource(ints = {10, 250, 500, 1500})

public void whenCreatingBatchOrders_thenSucceed(int numOrders) {

final List<Order> orders = new ArrayList<>();

testDoubles.newOrders(numOrders, 4, orders::add);

Timer.timeExecution("placeOrders_batch",

() -> orderService.placeOrders(orders));

}

The @ParameterizedTest and @ValueSource(ints = {10, 250, 500, 1500}) annotations means the test method will be called multiple times with different order counts.

The singleton approach does batching as well (when enabled) but its scoped to one order and its items in each transaction. The second approach passes a bunch of orders with items to the transactional service method. Both methods use batching, but the latter one bit more extensively since it writes multiple orders in each transaction.

The OrderService itself is simple and just acts as a transaction boundary and delegates to the Spring Data JPA repository:

@Service

public class OrderService {

@Autowired

private OrderRepository orderRepository;

@TransactionBoundary

public void placeOrder(Order order) {

Assert.isTrue(!TransactionSynchronizationManager.isCurrentTransactionReadOnly(), "Read-only");

Assert.isTrue(TransactionSynchronizationManager.isActualTransactionActive(), "No tx");

orderRepository.save(order);

}

@TransactionBoundary

public void placeOrders(Collection<Order> orders) {

Assert.isTrue(!TransactionSynchronizationManager.isCurrentTransactionReadOnly(), "Read-only");

Assert.isTrue(TransactionSynchronizationManager.isActualTransactionActive(), "No tx");

orderRepository.saveAll(orders);

}

@TransactionBoundary

public void updateOrderStatus(Iterable<UUID> ids, ShipmentStatus status) {

Assert.isTrue(!TransactionSynchronizationManager.isCurrentTransactionReadOnly(), "Read-only");

Assert.isTrue(TransactionSynchronizationManager.isActualTransactionActive(), "No tx");

orderRepository.findAllById(ids).forEach(order -> order.setStatus(status));

}

@TransactionBoundary

public List<UUID> findOrderIdsWithStatus(ShipmentStatus status) {

return orderRepository.findIdsByShipmentStatus(status);

}

}

Batching Disabled

As initially mentioned, batching is disabled by default in Hibernate. This is demonstrated by the following log snippet when running the order creation test:

10:24:45.057 TRACE [i.r.s.a.aspect.RetryableAspect] Calling transaction boundary OrderService.placeOrder(..): attempt 1 of 10

10:24:45.057 TRACE [i.r.s.a.aspect.TransactionHintsAspect] Transaction attributes applied for OrderService.placeOrder(..): @io.roach.spring.annotations.TransactionBoundary(followerReadStaleness=(exact), maxBackoff=30000, readOnly=false, priority=normal, timeout=-1, timeTravel=(none), followerRead=false, retryAttempts=10, applicationName=(none), vectorize=auto)

10:24:45.057 TRACE [io.roach.SQL_TRACE] {"name":"", "connection":7, "time":0, "success":true, "type":"Prepared", "batch":false, "querySize":1, "batchSize":0, "query":["SET application_name=?"], "params":[["UNNAMED"]]}

10:24:45.089 TRACE [io.roach.SQL_TRACE] {"name":"", "connection":7, "time":0, "success":true, "type":"Prepared", "batch":false, "querySize":1, "batchSize":0, "query":["insert into orders (customer_id, date_placed, date_updated, deliv_address1, deliv_address2, deliv_city, deliv_country, deliv_postcode, status, total_price, id) values (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)"], "params":[["6a593014-dbc4-4927-a1f0-3f0d60c1eee8","2022-03-16 10:24:45.073","2022-03-16 10:24:45.073","Ag5aq5tbqY1_CCxlJIgE","dy4KbOJZU4_VIU9Hsv8r","Paris","Australia","63922","placed","5094.20","987a0cd3-d1c4-491d-96de-b2e08e748642"]]}

10:24:45.104 TRACE [io.roach.SQL_TRACE] {"name":"", "connection":7, "time":15, "success":true, "type":"Prepared", "batch":false, "querySize":1, "batchSize":0, "query":["insert into order_items (order_id, item_pos, product_id, quantity, unit_price) values (?, ?, ?, ?, ?)"], "params":[["987a0cd3-d1c4-491d-96de-b2e08e748642","0","f0d7ca0a-0d04-4c84-bfa2-0cba4cc1f4a1","5","25.37"]]}

10:24:45.104 TRACE [io.roach.SQL_TRACE] {"name":"", "connection":7, "time":0, "success":true, "type":"Prepared", "batch":false, "querySize":1, "batchSize":0, "query":["insert into order_items (order_id, item_pos, product_id, quantity, unit_price) values (?, ?, ?, ?, ?)"], "params":[["987a0cd3-d1c4-491d-96de-b2e08e748642","1","d13a528c-c2c4-4626-b67e-2a03b98145ed","5","338.72"]]}

10:24:45.104 TRACE [io.roach.SQL_TRACE] {"name":"", "connection":7, "time":0, "success":true, "type":"Prepared", "batch":false, "querySize":1, "batchSize":0, "query":["insert into order_items (order_id, item_pos, product_id, quantity, unit_price) values (?, ?, ?, ?, ?)"], "params":[["987a0cd3-d1c4-491d-96de-b2e08e748642","2","bf05bb28-5b58-4ca9-a566-6cb73d98654b","5","362.63"]]}

10:24:45.104 TRACE [io.roach.SQL_TRACE] {"name":"", "connection":7, "time":0, "success":true, "type":"Prepared", "batch":false, "querySize":1, "batchSize":0, "query":["insert into order_items (order_id, item_pos, product_id, quantity, unit_price) values (?, ?, ?, ?, ?)"], "params":[["987a0cd3-d1c4-491d-96de-b2e08e748642","3","e0b22301-8750-4ea2-bcf3-d1c9bbe685b1","5","292.12"]]}

Take note on "batch": false and "batchSize": 0. Not ideal for write performance, so let's enable batching next.

Batching Enabled

To enable batching, just set the hibernate.jdbc.batch_size hibernate property to a positive value:

setProperty(Environment.STATEMENT_BATCH_SIZE, 24);

Re-running the test and the app-level log output is now as follows:

10:38:51.590 TRACE [i.r.s.a.aspect.RetryableAspect] Calling transaction boundary OrderService.placeOrder(..): attempt 1 of 10

10:38:51.590 TRACE [i.r.s.a.aspect.TransactionHintsAspect] Transaction attributes applied for OrderService.placeOrder(..): @io.roach.spring.annotations.TransactionBoundary(followerReadStaleness=(exact), maxBackoff=30000, readOnly=false, priority=normal, timeout=-1, followerRead=false, timeTravel=(none), retryAttempts=10, applicationName=(none), vectorize=auto)

10:38:51.590 TRACE [io.roach.SQL_TRACE] {"name":"", "connection":7, "time":0, "success":true, "type":"Prepared", "batch":false, "querySize":1, "batchSize":0, "query":["SET application_name=?"], "params":[["UNNAMED"]]}

10:38:51.621 TRACE [io.roach.SQL_TRACE] {"name":"", "connection":7, "time":15, "success":true, "type":"Prepared", "batch":true, "querySize":1, "batchSize":1, "query":["insert into orders (customer_id, date_placed, date_updated, deliv_address1, deliv_address2, deliv_city, deliv_country, deliv_postcode, status, total_price, id) values (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)"], "params":[["b816d5c2-2cb6-4ee2-b205-7b36539a6130","2022-03-16 10:38:51.606","2022-03-16 10:38:51.606","V84AvmSRnM4zlhCPd7Je","-nvY5d7Q4geuvLPiI2rL","Hong kong","Singapore","72723","placed","4735.40","889280ef-7e0a-4d6a-8123-e98fa972851c"]]}

10:38:51.621 TRACE [io.roach.SQL_TRACE] {"name":"", "connection":7, "time":0, "success":true, "type":"Prepared", "batch":true, "querySize":1, "batchSize":4, "query":["insert into order_items (order_id, item_pos, product_id, quantity, unit_price) values (?, ?, ?, ?, ?)"], "params":[["889280ef-7e0a-4d6a-8123-e98fa972851c","0","86018188-4dc4-4d47-977f-1e8943a05dc7","5","257.46"],["889280ef-7e0a-4d6a-8123-e98fa972851c","1","34437ac1-3531-4973-afe3-fd483bbc5ae6","5","433.77"],["889280ef-7e0a-4d6a-8123-e98fa972851c","2","fa5ce088-023e-4fb4-9cd9-634dc7d0295f","5","203.71"],["889280ef-7e0a-4d6a-8123-e98fa972851c","3","4c913ec6-ac36-4413-8ae1-26d2ffaddb67","5","52.14"]]}

This is however just the client side log, produced through TTDDYY that wraps a logging proxy around the DataSource. Since we lean on the mantra trust but verify, we want to make really sure that the statements are actually batched over the wire to the server side (DB) and to do that we need to enable database trace logging.

In CockroachDB, here's how:

set cluster setting sql.trace.log_statement_execute=true;

If you are running a cluster of CockroachDB nodes, one easy way to observe the SQL activity in the logs is to use the debug merge-logs command and tail the output:

tail -f cockroach debug merge-logs datafiles/*/logs/*

Lets check the output:

I220316 09:42:26.519344 4223141 9@util/log/event_log.go:32 ⋮ [n2,client=‹192.168.1.99:51126›,hostnossl,user=root] 277883 ={"Timestamp":1647423746517226492,"EventType":"query_execute","Statement":"INSERT INTO ‹\"\"›.‹\"\"›.‹orders›(‹customer_id›, ‹date_placed›, ‹date_updated›, ‹deliv_address1›, ‹deliv_address2›, ‹deliv_city›, ‹deliv_country›, ‹deliv_postcode›, ‹status›, ‹total_price›, ‹id›) VALUES ($1, $2, $3, $4, $5, $6, $7, $8, $9, $10, $11)","Tag":"INSERT","User":"root","ApplicationName":"‹UNNAMED›","PlaceholderValues":["‹'4552dc4c-6d9f-4931-9a2c-8b73a52bcde5'›","‹'2022-03-16 10:41:31.945+01:00'›","‹'2022-03-16 10:41:31.945+01:00'›","‹'efCTlCvQRh_EP0X0_DBo'›","‹'1KmcxNQhR3bFfpdrxJdT'›","‹'Boston'›","‹'United States'›","‹'57991'›","‹'placed'›","‹5637.20›","‹'552c37c4-a75b-4678-9b4a-8e790d462b5c'›"],"ExecMode":"exec","NumRows":1,"Age":1.090778,"TxnCounter":146}

I220316 09:42:26.531048 4223141 9@util/log/event_log.go:32 ⋮ [n2,client=‹192.168.1.99:51126›,hostnossl,user=root] 277884 ={"Timestamp":1647423746517226492,"EventType":"query_execute","Statement":"INSERT INTO ‹\"\"›.‹\"\"›.‹order_items›(‹order_id›, ‹item_pos›, ‹product_id›, ‹quantity›, ‹unit_price›) VALUES ($1, $2, $3, $4, $5)","Tag":"INSERT","User":"root","ApplicationName":"‹UNNAMED›","PlaceholderValues":["‹'552c37c4-a75b-4678-9b4a-8e790d462b5c'›","‹0›","‹'ac210e02-45d6-4e66-b8e6-b59ef977801a'›","‹5›","‹282.93›"],"ExecMode":"exec","NumRows":1,"Age":11.100739,"TxnCounter":146}

I220316 09:42:26.532069 4223141 9@util/log/event_log.go:32 ⋮ [n2,client=‹192.168.1.99:51126›,hostnossl,user=root] 277885 ={"Timestamp":1647423746517226492,"EventType":"query_execute","Statement":"INSERT INTO ‹\"\"›.‹\"\"›.‹order_items›(‹order_id›, ‹item_pos›, ‹product_id›, ‹quantity›, ‹unit_price›) VALUES ($1, $2, $3, $4, $5)","Tag":"INSERT","User":"root","ApplicationName":"‹UNNAMED›","PlaceholderValues":["‹'552c37c4-a75b-4678-9b4a-8e790d462b5c'›","‹1›","‹'7b2d8fd0-b249-485a-9689-0f092923cf49'›","‹5›","‹410.20›"],"ExecMode":"exec","NumRows":1,"Age":12.125457,"TxnCounter":146}

I220316 09:42:26.532977 4223141 9@util/log/event_log.go:32 ⋮ [n2,client=‹192.168.1.99:51126›,hostnossl,user=root] 277886 ={"Timestamp":1647423746517226492,"EventType":"query_execute","Statement":"INSERT INTO ‹\"\"›.‹\"\"›.‹order_items›(‹order_id›, ‹item_pos›, ‹product_id›, ‹quantity›, ‹unit_price›) VALUES ($1, $2, $3, $4, $5)","Tag":"INSERT","User":"root","ApplicationName":"‹UNNAMED›","PlaceholderValues":["‹'552c37c4-a75b-4678-9b4a-8e790d462b5c'›","‹2›","‹'356d6294-eee8-45bd-83b0-f87d25d56448'›","‹5›","‹293.57›"],"ExecMode":"exec","NumRows":1,"Age":13.023089,"TxnCounter":146}

I220316 09:42:26.533833 4223141 9@util/log/event_log.go:32 ⋮ [n2,client=‹192.168.1.99:51126›,hostnossl,user=root] 277887 ={"Timestamp":1647423746517226492,"EventType":"query_execute","Statement":"INSERT INTO ‹\"\"›.‹\"\"›.‹order_items›(‹order_id›, ‹item_pos›, ‹product_id›, ‹quantity›, ‹unit_price›) VALUES ($1, $2, $3, $4, $5)","Tag":"INSERT","User":"root","ApplicationName":"‹UNNAMED›","PlaceholderValues":["‹'552c37c4-a75b-4678-9b4a-8e790d462b5c'›","‹3›","‹'c27b565c-10ac-4b4d-9e83-6ed80986c52a'›","‹5›","‹140.74›"],"ExecMode":"exec","NumRows":1,"Age":13.886518,"TxnCounter":146}

Wait a minute, this is not really what we expected!? All four order_item rows are still inserted separately rather than in a single batch.

The reason is that we have omitted one key parameter, which is to enable rewriting INSERTs to multi-value INSERTs. This is done with the reWriteBatchedInserts connection parameter:

ds.addDataSourceProperty("reWriteBatchedInserts", true);

(you can also pass it as a JDBC URL parameter)

This will change batch inserts from insert into order_item (col1, col2, col3) values (1,2,3) into insert into order_item (col1, col2, col3) values (1,2,3), (4,5,6).

Let's see if that's the actual case after setting the parameter:

I220316 09:51:16.523475 4242163 9@util/log/event_log.go:32 ⋮ [n2,client=‹192.168.1.99:56360›,hostnossl,user=root] 279905 ={"Timestamp":1647424276521272393,"EventType":"query_execute","Statement":"INSERT INTO ‹\"\"›.‹\"\"›.‹orders›(‹customer_id›, ‹date_placed›, ‹date_updated›, ‹deliv_address1›, ‹deliv_address2›, ‹deliv_city›, ‹deliv_country›, ‹deliv_postcode›, ‹status›, ‹total_price›, ‹id›) VALUES ($1, $2, $3, $4, $5, $6, $7, $8, $9, $10, $11)","Tag":"INSERT","User":"root","ApplicationName":"‹UNNAMED›","PlaceholderValues":["‹'853cec70-2ee4-4759-94ba-db6aced88018'›","‹'2022-03-16 10:50:21.96+01:00'›","‹'2022-03-16 10:50:21.96+01:00'›","‹'OkAcKMD0qti2Uu1sYIJV'›","‹'wDGAoR-M9xkIIwZE2QQ9'›","‹'Hong kong'›","‹'Malta'›","‹'33687'›","‹'placed'›","‹6264.25›","‹'3a6a16cc-e49b-4854-bb43-aed98e48cf6d'›"],"ExecMode":"exec","NumRows":1,"Age":1.14776,"TxnCounter":122}

I220316 09:51:16.529996 4242163 9@util/log/event_log.go:32 ⋮ [n2,client=‹192.168.1.99:56360›,hostnossl,user=root] 279906 ={"Timestamp":1647424276521272393,"EventType":"query_execute","Statement":"INSERT INTO ‹\"\"›.‹\"\"›.‹order_items›(‹order_id›, ‹item_pos›, ‹product_id›, ‹quantity›, ‹unit_price›) VALUES ($1, $2, $3, $4, $5), ($6, $7, $8, $9, $10), ($11, $12, $13, $14, $15), ($16, $17, $18, $19, $20)","Tag":"INSERT","User":"root","ApplicationName":"‹UNNAMED›","PlaceholderValues":["‹'3a6a16cc-e49b-4854-bb43-aed98e48cf6d'›","‹0›","‹'83fe9e0e-dbb2-40ab-9f67-c4e6c97388ef'›","‹5›","‹432.98›","‹'3a6a16cc-e49b-4854-bb43-aed98e48cf6d'›","‹1›","‹'747df5ec-6e83-4ae8-b72b-5616cf113cfc'›","‹5›","‹315.46›","‹'3a6a16cc-e49b-4854-bb43-aed98e48cf6d'›","‹2›","‹'057ec09b-490e-4e22-94ca-70ca794c9619'›","‹5›","‹472.38›","‹'3a6a16cc-e49b-4854-bb43-aed98e48cf6d'›","‹3›","‹'e8d2defc-d59e-4b78-8b9c-865e46c48d04'›","‹5›","‹32.03›"],"ExecMode":"exec","NumRows":4,"Age":5.872477,"TxnCounter":122}

I220316 09:51:16.534327 4242163 9@util/log/event_log.go:32 ⋮ [n2,client=‹192.168.1.99:56360›,hostnossl,user=root] 279907 ={"Timestamp":1647424276534179566,"EventType":"query_execute","Statement":"SET application_name = $1","Tag":"SET","User":"root","ApplicationName":"‹UNNAMED›","PlaceholderValues":["‹'UNNAMED'›"],"ExecMode":"exec","Age":0.14912,"TxnCounter":123}

Much better. The rewrite parameter is apparently needed to enable INSERT batching over the wire even if the app-tier client JDBC driver is instructed to do batching.

The demo application also support PostgreSQL. Let's see if we get the same result by enabling SQL trace logging in PostgreSQL. This is done by editing the postgresql.conf file and set the log_statements property to all:

log_statement = 'all'

Re-running the test without reWriteBatchedInserts:

2022-03-16 10:03:00.102 UTC [3668] postgres@spring_boot_batch LOG: execute <unnamed>: insert into orders (customer_id, date_placed, date_updated, deliv_address1, deliv_address2, deliv_city, deliv_country, deliv_postcode, status, total_price, id) values ($1, $2,

$3, $4, $5, $6, $7, $8, $9, $10, $11)

2022-03-16 10:03:00.102 UTC [3668] postgres@spring_boot_batch DETAIL: parameters: $1 = '04d7e9fa-1a5a-4904-8e21-c7ca63c60451', $2 = '2022-03-16 11:02:05.547+01', $3 = '2022-03-16 11:02:05.547+01', $4 = 'w6alOP83VqxS4sTAyiVY', $5 = 'N0NKaoAZ9Ld_5BSDKGHh', $6 = 'S

ydney', $7 = 'Switzerland', $8 = '22048', $9 = 'placed', $10 = '5250.10', $11 = '9090a71e-896c-49b0-aa48-601f8359f930'

2022-03-16 10:03:00.102 UTC [3668] postgres@spring_boot_batch LOG: execute S_10: insert into order_items (order_id, item_pos, product_id, quantity, unit_price) values ($1, $2, $3, $4, $5)

2022-03-16 10:03:00.102 UTC [3668] postgres@spring_boot_batch DETAIL: parameters: $1 = '9090a71e-896c-49b0-aa48-601f8359f930', $2 = '0', $3 = 'a4095ed3-7d24-4364-b856-ea99736bdbd8', $4 = '5', $5 = '490.91'

2022-03-16 10:03:00.102 UTC [3668] postgres@spring_boot_batch LOG: execute S_10: insert into order_items (order_id, item_pos, product_id, quantity, unit_price) values ($1, $2, $3, $4, $5)

2022-03-16 10:03:00.102 UTC [3668] postgres@spring_boot_batch DETAIL: parameters: $1 = '9090a71e-896c-49b0-aa48-601f8359f930', $2 = '1', $3 = 'fa4cf466-2f4d-4724-be7d-fccb28793d0f', $4 = '5', $5 = '438.33'

2022-03-16 10:03:00.103 UTC [3668] postgres@spring_boot_batch LOG: execute S_10: insert into order_items (order_id, item_pos, product_id, quantity, unit_price) values ($1, $2, $3, $4, $5)

2022-03-16 10:03:00.103 UTC [3668] postgres@spring_boot_batch DETAIL: parameters: $1 = '9090a71e-896c-49b0-aa48-601f8359f930', $2 = '2', $3 = '423de68c-62c0-45f4-b69f-3c85b661fa68', $4 = '5', $5 = '20.00'

2022-03-16 10:03:00.103 UTC [3668] postgres@spring_boot_batch LOG: execute S_10: insert into order_items (order_id, item_pos, product_id, quantity, unit_price) values ($1, $2, $3, $4, $5)

2022-03-16 10:03:00.103 UTC [3668] postgres@spring_boot_batch DETAIL: parameters: $1 = '9090a71e-896c-49b0-aa48-601f8359f930', $2 = '3', $3 = '51855f01-d3d2-4b28-a862-670840ee70f3', $4 = '5', $5 = '100.78'

Ok, no batching of INSERTs. Now lets check the log when reWriteBatchedInserts is set to true:

2022-03-16 10:05:26.902 UTC [3916] postgres@spring_boot_batch LOG: execute <unnamed>: insert into orders (customer_id, date_placed, date_updated, deliv_address1, deliv_address2, deliv_city, deliv_country, deliv_postcode, status, total_price, id) values ($1, $2, $3, $4, $5, $6, $7, $8, $9, $10, $11)

2022-03-16 10:05:26.902 UTC [3916] postgres@spring_boot_batch DETAIL: parameters: $1 = '63886ad4-1c55-46b6-9fc6-f841418ddc2e', $2 = '2022-03-16 11:04:32.35+01', $3 = '2022-03-16 11:04:32.35+01', $4 = 'MShABnvaFI85CUbA6y4f', $5 = '0gfm57EIIWf_GgjzKlm0', $6 = 'Chicago', $7 = 'Puerto Rico', $8 = '95711', $9 = 'placed', $10 = '2553.75', $11 = '33bc2441-b11f-4dea-b1ed-adcbf0ad3ddc'

2022-03-16 10:05:26.903 UTC [3916] postgres@spring_boot_batch LOG: execute <unnamed>: insert into order_items (order_id, item_pos, product_id, quantity, unit_price) values ($1, $2, $3, $4, $5),($6, $7, $8, $9, $10),($11, $12, $13, $14, $15),($16, $17, $18, $19, $20)

2022-03-16 10:05:26.903 UTC [3916] postgres@spring_boot_batch DETAIL: parameters: $1 = '33bc2441-b11f-4dea-b1ed-adcbf0ad3ddc', $2 = '0', $3 = 'e2559f61-0dbd-43ea-8f1e-cda004ffc410', $4 = '5', $5 = '20.00', $6 = '33bc2441-b11f-4dea-b1ed-adcbf0ad3ddc', $7 = '1', $8 = '4c8f37b2-8fe2-46ec-8fd2-fe725da0785f', $9 = '5', $10 = '102.45', $11 = '33bc2441-b11f-4dea-b1ed-adcbf0ad3ddc', $12 = '2', $13 = '452bb019-98c0-43c3-980f-273c2ddd8f88', $14 = '5', $15 = '237.47', $16 = '33bc2441-b11f-4dea-b1ed-adcbf0ad3ddc', $17 = '3', $18 = 'b4b82a2e-0b95-4c79-8774-955184839dcb', $19 = '5', $20 = '150.83'

2022-03-16 10:05:26.904 UTC [3916] postgres@spring_boot_batch LOG: execute S_1: COMMIT

Bingo, in other words the same results as with CockroachDB, which by the way implements the PGWire protocol to leverage the existing client library ecosystem.

Order Updates

We're not quite done yet. This was for the INSERT's only and we also have UPDATE's to deal with. Hibernate provides a few additional properties that both sort/order the INSERTs and UPDATEs and adds batching of the UPDATEs.

setProperty(Environment.ORDER_INSERTS, Boolean.TRUE.toString());

setProperty(Environment.ORDER_UPDATES, Boolean.TRUE.toString());

setProperty(Environment.BATCH_VERSIONED_DATA, Boolean.TRUE.toString());

Now let's do a final run and see how the statements are ordered and batched:

11:28:29.796 TRACE [io.roach.SQL_TRACE] {"name":"", "connection":7, "time":15, "success":true, "type":"Prepared", "batch":false, "querySize":1, "batchSize":0, "query":["select product0_.id as id1_3_0_, product0_.inventory as inventor2_3_0_, product0_.name as name3_3_0_, product0_.price as price4_3_0_, product0_.sku as sku5_3_0_ from products product0_ where product0_.id=?"], "params":[["4f622f16-4b9a-4384-ac92-1699676c0843"]]}

11:28:29.796 TRACE [io.roach.SQL_TRACE] {"name":"", "connection":7, "time":0, "success":true, "type":"Prepared", "batch":false, "querySize":1, "batchSize":0, "query":["select product0_.id as id1_3_0_, product0_.inventory as inventor2_3_0_, product0_.name as name3_3_0_, product0_.price as price4_3_0_, product0_.sku as sku5_3_0_ from products product0_ where product0_.id=?"], "params":[["325648d0-c7e7-4376-83dd-e41f6095b687"]]}

11:28:29.796 TRACE [io.roach.SQL_TRACE] {"name":"", "connection":7, "time":0, "success":true, "type":"Prepared", "batch":false, "querySize":1, "batchSize":0, "query":["select product0_.id as id1_3_0_, product0_.inventory as inventor2_3_0_, product0_.name as name3_3_0_, product0_.price as price4_3_0_, product0_.sku as sku5_3_0_ from products product0_ where product0_.id=?"], "params":[["844bceb8-e3e6-4c03-8791-7939298ba792"]]}

11:28:29.796 TRACE [io.roach.SQL_TRACE] {"name":"", "connection":7, "time":0, "success":true, "type":"Prepared", "batch":false, "querySize":1, "batchSize":0, "query":["select product0_.id as id1_3_0_, product0_.inventory as inventor2_3_0_, product0_.name as name3_3_0_, product0_.price as price4_3_0_, product0_.sku as sku5_3_0_ from products product0_ where product0_.id=?"], "params":[["efab0b27-e2c1-4b7c-9b6e-63bbead34c3d"]]}

11:28:29.812 TRACE [io.roach.SQL_TRACE] {"name":"", "connection":7, "time":0, "success":true, "type":"Prepared", "batch":true, "querySize":1, "batchSize":1, "query":["insert into orders (customer_id, date_placed, date_updated, deliv_address1, deliv_address2, deliv_city, deliv_country, deliv_postcode, status, total_price, id) values (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)"], "params":[["48727a38-081c-4d49-8bc4-122310fa5da3","2022-03-16 11:28:29.796","2022-03-16 11:28:29.796","KVF7xkau82vAZU9gHE-o","OGk7LOlz23kSNYTREFYs","Stockholm","Philippines","25120","confirmed","4876.75","bffbdbd7-b0a8-48a1-ba15-d5b0abc35977"]]}

11:28:29.827 TRACE [io.roach.SQL_TRACE] {"name":"", "connection":7, "time":15, "success":true, "type":"Prepared", "batch":true, "querySize":1, "batchSize":4, "query":["update products set inventory=?, name=?, price=?, sku=? where id=?"], "params":[["501","CockroachDB Unleashed - First Edition","62.78","CGSzNslgmcHATUE0","844bceb8-e3e6-4c03-8791-7939298ba792"],["620","CockroachDB Unleashed - First Edition","410.13","N1nztuPzc0irU4Td","efab0b27-e2c1-4b7c-9b6e-63bbead34c3d"],["1245","CockroachDB Unleashed - First Edition","467.97","Z6rtxWJhoxLKbCi3","325648d0-c7e7-4376-83dd-e41f6095b687"],["987","CockroachDB Unleashed - First Edition","34.47","YNY0eZmIpLx1Z7r-","4f622f16-4b9a-4384-ac92-1699676c0843"]]}

11:28:29.843 TRACE [io.roach.SQL_TRACE] {"name":"", "connection":7, "time":16, "success":true, "type":"Prepared", "batch":true, "querySize":1, "batchSize":4, "query":["insert into order_items (order_id, item_pos, product_id, quantity, unit_price) values (?, ?, ?, ?, ?)"], "params":[["bffbdbd7-b0a8-48a1-ba15-d5b0abc35977","0","4f622f16-4b9a-4384-ac92-1699676c0843","5","34.47"],["bffbdbd7-b0a8-48a1-ba15-d5b0abc35977","1","325648d0-c7e7-4376-83dd-e41f6095b687","5","467.97"],["bffbdbd7-b0a8-48a1-ba15-d5b0abc35977","2","844bceb8-e3e6-4c03-8791-7939298ba792","5","62.78"],["bffbdbd7-b0a8-48a1-ba15-d5b0abc35977","3","efab0b27-e2c1-4b7c-9b6e-63bbead34c3d","5","410.13"]]}

Configuration Summary

Set the following Hibernate properties:

setProperty(Environment.STATEMENT_BATCH_SIZE, 24);

setProperty(Environment.ORDER_INSERTS, Boolean.TRUE.toString());

setProperty(Environment.ORDER_UPDATES, Boolean.TRUE.toString());

setProperty(Environment.BATCH_VERSIONED_DATA, Boolean.TRUE.toString());

Set the reWriteBatchedInserts property in the datasource or JDBC URL to true:

ds.addDataSourceProperty("reWriteBatchedInserts", true);

In terms of optimal batch size it always depends on the use case. The number of columns, size of the statement with bound parameters etc. Less is more, so one rule of thumb is to start with a lower number and increase with 16 or 32 increments and measure.

Benchmark

This benchmark illustrates that the difference in throughput is about 3-4x for this workload:

@org.junit.jupiter.api.Order(1)

@ParameterizedTest

@ValueSource(ints = {500, 1000, 1500, 2000, 2500, 3000, 3500, 4000})

public void whenCreatingBulkOrders_thenAlsoUpdateInventory(int numOrders) {

final List<Order> orders = new ArrayList<>();

testDoubles.newOrders(numOrders, 4, orders::add);

Timer.timeExecution("placeOrderAndUpdateInventory(" + numOrders + ")",

() -> orderService.placeOrderAndUpdateInventory(orders));

}

Results with batch size 24 and flush intervals of 150 orders:

| Order # | No Batching (sec) | Batching (sec) |

| 500 | 9.590 | 2.465 |

| 1000 | 10.511 | 2.499 |

| 1500 | 14.177 | 3.587 |

| 2000 | 19.173 | 5.600 |

| 2500 | 23.150 | 6.390 |

| 3000 | 28.600 | 7.690 |

| 3500 | 32.664 | 8.540 |

| 4000 | 37.853 | 10.926 |

Conclusions

Enabling batch statements for write-heavy workloads using JPA and Hibernate can make a significant performance difference. There are a few pitfalls to be aware of in terms of enabling batching but once done, it can yield a big performance speed-up with almost zero effort.