
Defining Quality Attributes

Quality attributes or non-functional requirements in software systems

Introduction

Quality attributes are synonymous with non-functional requirements: the properties and characteristics of a software system that are not directly related to a functional aspect. Quality attributes are what ultimately define a system's runtime and evolutionary characteristics. These attributes indicate the software architecture style of the system and how different implementation mechanisms, including the database, support these qualities.

In this article, we'll take a look at some of these attributes and more specifically how they can be defined, quantified and addressed when using the unique capabilities of CockroachDB.

Commonly used quality attributes for software systems (there are many more):

Scalability - A system’s ability to scale with increasing load and business complexity.

Reliability - A measure of a system's ability to detect and recover from failures and deliver correct, consistent and reliable results.

Performance - The time it takes to execute an operation, usually measured in response time, transaction time and throughput (work per time unit).

Availability - A measure of the ability of a system to function in a state of serious service or infrastructure degradation.

Evolvability - A system’s ability to make changes with low cost and small client/user impact.

Maintainability - A system’s ability to be diagnosed and repaired after an error occurs.

Interoperability - How the system interacts with other subsystems or foreign services.

Visibility - A system’s support for debugging and real-time monitoring.

Security - A system’s ability to support security controls including access controls, encryption, data isolation, secure information processing and auditing for compliance.

Functional attributes in a software system are useless without quality, and non-functional attributes are useless without relevant purpose and meaning for a business. It's a task for architects and developers to map the problem domain against a solution domain and deliver solutions that meet both functional and non-functional requirements. The problem domain describes the what and why, and the solution domain describes the how.

The re-usable artifacts are typically software design guidelines and principles that both guide the development of new software components and help slow the deterioration of software over time due to change. Change is a given for any software component, and that is where the real cost sits in the lifecycle of software systems, not so much in the initial development effort. The architectural decisions made early in a product's life cycle to support things like evolvability, maintainability and low cost of change are very important for the total cost of ownership.

There's always a balance between the time invested in quality attributes and getting a new product or feature to market. Things need to be prioritized like anything else, and the best way is to ask the business stakeholders what is most important to deliver.

Qualifying Quality Attributes

Defining clear, measurable and quantifiable requirements is an art form, both business-oriented and technical in nature. To get good at it you need to ask questions, and a lot of them.

How can we define, measure and communicate the importance of abstract things like evolvability? Let's give it a try by listing a few questions for each listed quality attribute. Because the database is a critical infrastructure component for any software system, let's also see how each quality attribute can be addressed from a database point of view. Not just any database but CockroachDB.

Scalability

A system’s ability to scale with increasing load and business complexity.

Scalability describes the ability of a system to cope with increased load, most often measured in latency percentiles and throughput. When the load on a system increases, it's relevant to observe how many more resources are needed to maintain the same level of performance. When a business grows in terms of increased customer demands, new markets or acquisitions, scalability also describes the ability to adapt systems to that new reality without having to undergo major refactoring or redesign efforts.

Questions:

What are the data volumes and what’s the expected growth over time?

What is the impact of traffic, data or customer base growing by 10x?

What level of scalability is relevant: local, regional or global?

How important is it to deliver a consistent customer experience globally?

What does the traffic load look like for steady state, peak, extended peak and stress?

Does the system need to auto-adjust to increased/decreased spike demands?

Is data archiving to offline systems needed?

Solutions:

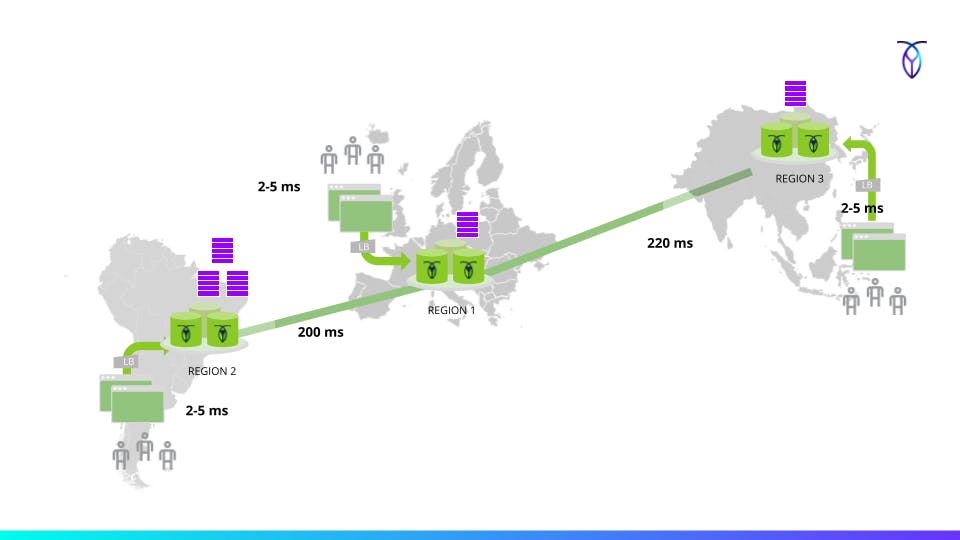

CockroachDB is a geo-distributed SQL database designed to scale horizontally by adding more nodes to a cluster, increasing compute and IO capacity. Given the transactional properties and consistency guarantees, it also enables crafting multi-active systems, where it's relevant to distinguish between response times and service times.

Service time is the time it takes to process a synchronous request, from entering a service's boundary to preparing a response. Response time is service time plus the time it takes to transport traffic over the network, including queuing delays. For a cluster spanning, for example, SA-EU-AP, we can drastically reduce response times by servicing requests close to where the data is stored.
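To make the distinction concrete, here is a minimal Python sketch of the relationship between service time and response time. The `RequestTiming` class and all latency figures are hypothetical, chosen only to illustrate how moving the service closer to the client changes response time while service time stays the same.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestTiming:
    """Hypothetical timing breakdown for one synchronous request."""
    service_ms: float      # time spent inside the service boundary
    network_rtt_ms: float  # network transport between client and service
    queue_ms: float = 0.0  # queuing delay along the path

    @property
    def response_ms(self) -> float:
        # Response time = service time + network transport + queuing delay
        return self.service_ms + self.network_rtt_ms + self.queue_ms


# Illustrative figures only: same service time, different client distance.
remote = RequestTiming(service_ms=5.0, network_rtt_ms=180.0, queue_ms=2.0)
local = RequestTiming(service_ms=5.0, network_rtt_ms=4.0, queue_ms=2.0)

print(remote.response_ms)  # 187.0
print(local.response_ms)   # 11.0
```

In this made-up example the service does the same amount of work in both cases. Only the network component differs, which is the part that data locality is meant to address.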

Multi-active systems add the ability to control both service and response time through physical network topologies, data locality and replication policies. Depending on how nodes and replicas are arranged, both latency and fault tolerance or survival goals can also be controlled.

It provides the equivalent of a content-delivery network (CDN) for transactional data, logically spanning the entire globe. No matter which (edge) node you interact with, it will provide a consistent and accurate result.

Reliability

A measure of the system's ability to detect and recover from failures and deliver correct, consistent and reliable results.

Reliability describes the ability of a system to operate correctly (do the right thing) at the desired level of performance, both under heavy concurrent workloads and in the event of infrastructure failures like partial and full zone or region failures. It’s more difficult to measure than scalability but can be defined in different ways.

For instance:

Not lose or corrupt data because of infrastructure failures (no partial commits)

Not lose or corrupt data because of concurrency anomalies (ex: lost updates, phantom reads, read/write skew)

Not provide stale data where authoritative data is expected

Prevent diverging histories of state and throwing away committed writes when healing from network partitions

Not breach correctness rules or invariants (ex: negative balance in accounting)

Tolerate human mistakes and errors

Questions:

What is the anatomy of a typical business transaction?

How does it get triggered?

What is the average duration?

How much information needs to be scanned vs returned?

What are the expected success and failure outcomes?

How are key business rule invariants to be protected?

Must reads always be authoritative, or can they be potentially stale?

How are business transactions spanning multiple services handled?

How are transient transaction errors handled?

How are timeouts/indeterminate outcomes handled?

How is service idempotency implemented?
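The questions about transient errors and idempotency often lead to a client-side retry loop. The following is a minimal Python sketch, not a definitive implementation. `TransientError` and `run_with_retry` are hypothetical names, and mapping a real driver's errors onto `TransientError` (for CockroachDB, retryable transaction errors carry SQLSTATE 40001) is left out. It assumes the retried work is idempotent, or is a transaction that was fully rolled back.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for errors that are safe to retry."""


def run_with_retry(txn: Callable[[], T],
                   max_attempts: int = 5,
                   base_delay_s: float = 0.05,
                   sleep: Callable[[float], None] = time.sleep) -> T:
    """Run txn, retrying on TransientError with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return txn()
        except TransientError:
            if attempt == max_attempts:
                raise  # give up and surface the error to the caller
            # The backoff ceiling doubles per attempt; jitter spreads out retries.
            ceiling = base_delay_s * (2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")


# Usage: a made-up operation that fails twice before succeeding.
attempts = {"n": 0}


def flaky_transfer() -> str:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise TransientError("retry me")
    return "committed"


print(run_with_retry(flaky_transfer, sleep=lambda _: None))  # committed
```

Timeouts and indeterminate outcomes are a different case. The caller cannot tell whether the work was applied, so a blind retry is only safe when the operation is idempotent.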

Solutions:

One feature of multi-active systems is the ability to operate simultaneously from multiple geographies with sustained throughput and transactional integrity, both during steady state and during disruptions or even disasters in other regions. In a 3-region CockroachDB deployment, one entire region can have an outage without affecting forward progress in the other two.
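The reasoning behind the 3-region claim can be sketched with simple majority arithmetic. CockroachDB replicates data with a consensus protocol that needs a majority of replicas to make progress. The Python sketch below assumes a hypothetical placement of one replica per region. The region names and helper functions are illustrative and are not part of any CockroachDB API.

```python
def quorum(replicas: int) -> int:
    """Smallest majority of a replica set."""
    return replicas // 2 + 1


def survives_region_loss(replicas_per_region: dict[str, int], lost: str) -> bool:
    """True if a majority of replicas remains after losing one region."""
    total = sum(replicas_per_region.values())
    remaining = total - replicas_per_region[lost]
    return remaining >= quorum(total)


# Hypothetical placement: three replicas, one per region.
balanced = {"region-a": 1, "region-b": 1, "region-c": 1}
print(survives_region_loss(balanced, "region-a"))  # True: 2 of 3 replicas remain

# Counter-example: two of three replicas sit in the region that fails.
skewed = {"region-a": 2, "region-b": 1}
print(survives_region_loss(skewed, "region-a"))    # False: 1 of 3 replicas remain
```

The counter-example shows why replica placement matters as much as replica count when defining survival goals.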

Performance

The time it takes to execute an operation, usually measured in response time or transaction time and throughput (work per time unit).

Questions:

How is customer experience affected by high response times and low throughput?

How many concurrent active sessions/users will connect to the system?

Are there specific performance and throughput targets (service level indicators) on a per-use case basis?

What is the ratio between reads and writes?

Can reads be served as potentially stale or always authoritative?

Will there be a caching tier to scale out reads? If so, how is the cache invalidated and kept in sync?

Defining performance requirements should ideally be context or journey specific. Most larger systems are composed of different customer journeys such as registration, login, deposit, withdrawal, pay and so on. Not all journeys have the same NFRs and may touch different services, hence it makes sense to define the performance goals based on that rather than at individual service level.

For example, for journey X:

Raw data size: 4TB

Active connections: 400 to 500

Actual users: 7M

Active users: 500k

Reads must be authoritative

Sustained throughput for 60 min, qualified with:

- 5,000 business transactions per sec, equivalent to 15K QPS, at P99 < 120ms, read ratio 75%

Peak throughput for 30 min, qualified with:

- 7,000 business transactions per sec, equivalent to 21K QPS, at P95 < 150ms, read ratio 65%

Extended peak throughput for 15 min, qualified with:

- 10,000 business transactions per sec, equivalent to 30K QPS, at P95 < 300ms, read ratio 65%
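Targets like these become easier to verify once they are written down as data. The Python sketch below encodes the journey X throughput targets and checks load-test measurements against them. The `LoadTarget` type, the nearest-rank percentile helper and the sample measurements are all assumptions made for illustration, and a real load-testing tool would supply its own percentile calculations.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class LoadTarget:
    """Hypothetical container for one throughput target of a journey."""
    name: str
    duration_min: int
    business_tps: int
    qps: int
    percentile: float      # e.g. 99 for P99
    max_latency_ms: float  # latency at that percentile must stay below this
    read_ratio: float


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of a list of latency samples."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_target(target: LoadTarget, latencies_ms: list[float],
                 measured_qps: float) -> bool:
    """True if measured throughput and tail latency satisfy the target."""
    return (measured_qps >= target.qps
            and percentile(latencies_ms, target.percentile) < target.max_latency_ms)


JOURNEY_X = [
    LoadTarget("sustained", 60, 5_000, 15_000, 99, 120, 0.75),
    LoadTarget("peak", 30, 7_000, 21_000, 95, 150, 0.65),
    LoadTarget("extended peak", 15, 10_000, 30_000, 95, 300, 0.65),
]

# Made-up measurements: latencies of 1..100 ms, throughput of 15,500 QPS.
sample_latencies = [float(ms) for ms in range(1, 101)]
print(meets_target(JOURNEY_X[0], sample_latencies, 15_500))  # True
```

Each target in the example works out to three queries per business transaction, which is a useful sanity check when converting between business transactions and QPS.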

Solutions:

CockroachDB delivers predictable response times and throughput for different workload scales. When optimizing performance characteristics, it is typically a matter of finding opportunities in:

Application workload patterns

Schema design

Cluster hardware capacity and utilization

Replica and leaseholder placement

Most opportunities are outlined in SQL performance best practices. A workload should be evenly distributed across all machines of a cluster (no hotspots) which happens automatically given a few schema design and load balancing considerations. By using different multi-region capabilities, the coordination between nodes over the network can also be minimized, drastically improving both read and write performance.

Let's wrap up the remaining quality attributes by just highlighting a few relevant questions.

Availability

A measure of the ability of a system to function in a state of serious service or infrastructure degradation.

Questions:

What level of redundancy will the system have (any accepted SPOFs)?

What type of infrastructure failures must the system survive (cloud, region, zone, rack or server)?

What’s the business impact of degraded or denied forward progress?

Will the system continue to function on partial infrastructure failure?

Are there any specific RTO and RPO metrics?

What are the requirements for backup and restore (MTTR)?
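One way to make these questions quantifiable is to translate an availability target into a yearly downtime budget and compare it with the recovery time objective. The Python sketch below is an illustration under stated assumptions. The function names, the availability targets and the outage count are hypothetical and do not come from any specific SLA.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def downtime_budget_minutes(availability_pct: float) -> float:
    """Allowed downtime per year for a given availability target."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability must be a percentage between 0 and 100")
    return MINUTES_PER_YEAR * (100 - availability_pct) / 100


def fits_budget(availability_pct: float, outages_per_year: int,
                rto_minutes: float) -> bool:
    """True if the expected outages, each recovered within the RTO, fit the budget."""
    return outages_per_year * rto_minutes <= downtime_budget_minutes(availability_pct)


# Hypothetical scenario: four outages a year, each recovered within 60 minutes.
print(round(downtime_budget_minutes(99.9), 1))  # roughly 525.6 minutes per year
print(fits_budget(99.9, outages_per_year=4, rto_minutes=60))
print(fits_budget(99.99, outages_per_year=4, rto_minutes=60))
```

In this scenario the four hours of expected recovery time fit within a 99.9% target but not within 99.99%, which is the kind of trade-off worth raising with business stakeholders.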

Evolvability

A system’s ability to make changes with low cost and small client impact.

Questions:

What's the structure and process around the development and deployment pipeline?

Are downtime windows allowed for production deployments?

Is a pre-production deployment environment needed?

What are the key factors that impact time-to-value in new business initiatives/improvements?

How does changing/adding functionality impact existing functionality?

Maintainability

A system’s ability to be repaired after an error occurs.

Questions:

What design principles are applied to reduce maintenance efforts?

How much QA/OPS effort is needed to verify and deploy the system?

Interoperability

How the system interacts with other subsystems or foreign services.

Questions:

How does data flow into the system and what is the output?

Is the system classified as online, nearline or offline?

What are the major infrastructure components involved (database, message broker)?

Is the system classified as a system of record or a system of access?

Is the interaction model a typical request/response based model or an event-driven, async model?

Visibility

A system’s support for debugging and real-time monitoring.

Questions:

How is the system monitored, and how are alerts acted on?

How can problems quickly be identified and corrected?

Security

A system’s ability to support security controls including access controls, encryption, data isolation, secure information processing and auditing for compliance.

Questions:

Will the system run in a PCI or equivalent regulated environment?

Will the system handle PII data?

What security mechanisms does the system require on ingress and egress channels, or data protection?

Conclusion

This article discusses non-functional requirements, also known as quality attributes, which are properties and characteristics of a software system that are not directly related to its functional aspects. It looks at how these requirements can be defined, quantified, and addressed when using the capabilities of CockroachDB.