Introduction

In a previous article, we looked at using super regions for data domiciling in CockroachDB. To recap, data domiciling is the art of controlling the placement of subsets of data in specific regions or locations. This is often required by privacy regulations like GDPR and The Wire Act in the US.

In this article, we'll look at a new concept in CockroachDB (since v22.2) called Secondary Regions. Secondary regions allow you to define a database region that will be used for failover in the event your primary region goes down. Previously, when the primary region failed, the leaseholders would be transferred to another region at random. Secondary regions now add control over that "fail-over".

In the context of super regions, if the primary region is part of a super region, the secondary region must also be a region within the primary super region.

For a primer in data domiciling and database survival in CockroachDB, see:

Let's go through a demo to see how secondary regions work with super regions.

Demo

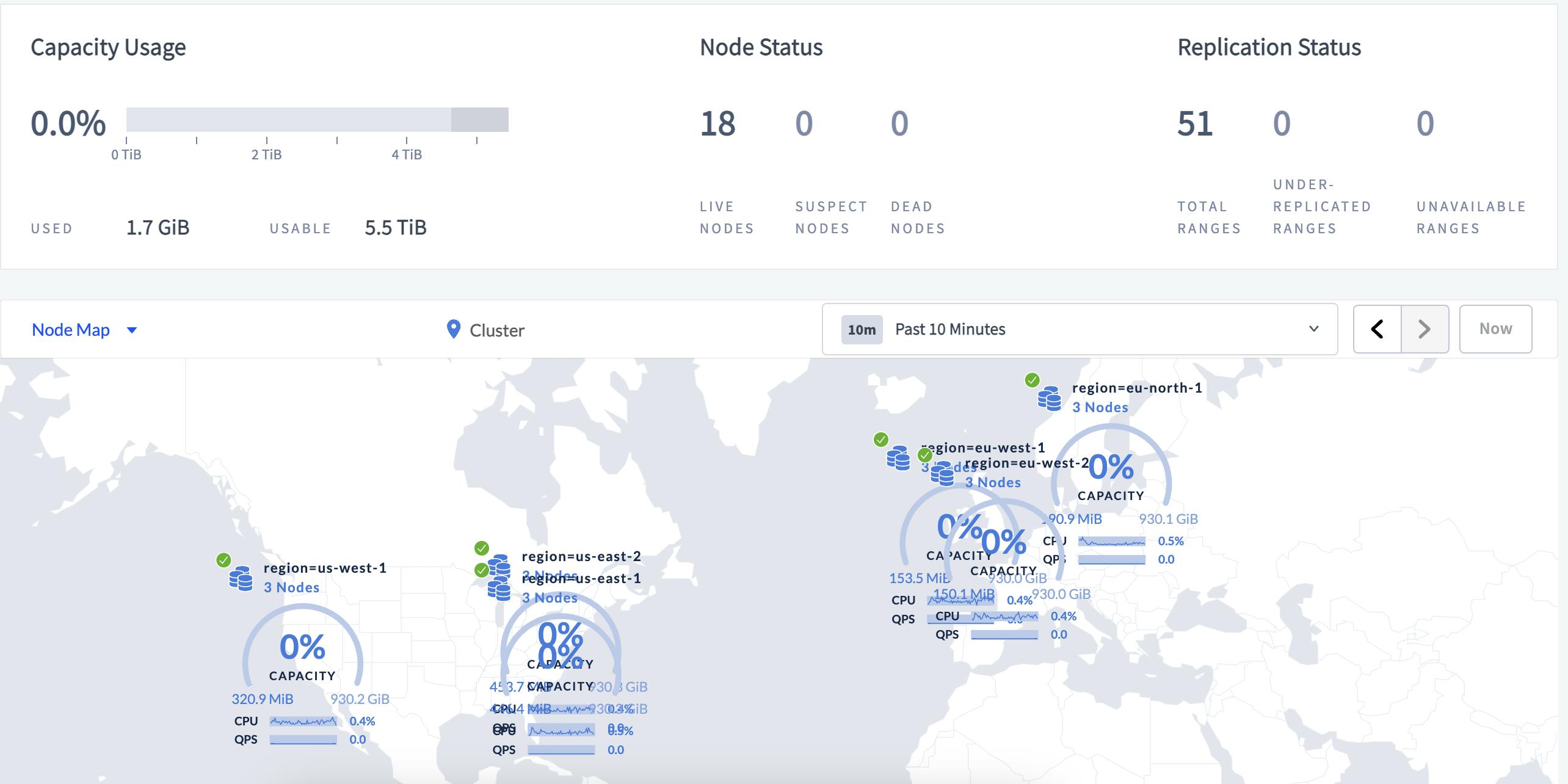

Similar to the super region demo, we will deploy a global cluster of 18 nodes (on different ports on a single machine) stretching the EU to the west and east coast of the US.

When properly configured, the DB Console should look like this:

Cluster Setup

The following script will start 18 nodes on a local machine.

#!/bin/bash

portbase=26258

httpportbase=8081

host=localhost

LOCALITY_ZONE=(

'region=eu-north-1,zone=eu-north-1a'

'region=eu-north-1,zone=eu-north-1b'

'region=eu-north-1,zone=eu-north-1c'

'region=eu-west-1,zone=eu-west-1a'

'region=eu-west-1,zone=eu-west-1b'

'region=eu-west-1,zone=eu-west-1c'

'region=eu-west-2,zone=eu-west-2a'

'region=eu-west-2,zone=eu-west-2b'

'region=eu-west-2,zone=eu-west-2c'

'region=us-east-1,zone=us-east-1a'

'region=us-east-1,zone=us-east-1b'

'region=us-east-1,zone=us-east-1c'

'region=us-east-2,zone=us-east-2a'

'region=us-east-2,zone=us-east-2b'

'region=us-east-2,zone=us-east-2c'

'region=us-west-1,zone=us-west-1a'

'region=us-west-1,zone=us-west-1b'

'region=us-west-1,zone=us-west-1c'

)

node=0;

for zone in "${LOCALITY_ZONE[@]}"

do

let node=($node+1)

let offset=${node}-1

let port=${portbase}+$offset

let httpport=${httpportbase}+$offset

let port1=${portbase}

let port2=${portbase}+1

let port3=${portbase}+2

join=${host}:${port1},${host}:${port2},${host}:${port3}

mempool="128MiB"

cockroach start \

--locality=${zone} \

--port=${port} \

--http-port=${httpport} \

--advertise-addr=${host}:${port} \

--join=${join} \

--insecure \

--store=datafiles/n${node} \

--cache=${mempool} \

--max-sql-memory=${mempool} \

--background

done

cockroach init --insecure --host=${host}:${portbase}

Check that the cluster is running by browsing to http://localhost:8081/.

Next, we'll add the regions and configure the database for region-level survival.

cockroach sql --insecure --host=localhost:26258

Note: For the next steps, you will need an enterprise trial license key.

For the node map to show all regions, add these localities:

DELETE FROM system.locations WHERE 1 = 1;

INSERT into system.locations

VALUES

('region', 'eu-north-1', 59.0, 18.0),

('region', 'us-east-1', 37.478397, -76.453077),

('region', 'us-east-2', 40.417287, -76.453077),

('region', 'us-east-3', 25.457287, -80.453077),

('region', 'us-west-1', 38.837522, -120.895824),

('region', 'us-west-2', 43.804133, -120.554201),

('region', 'ca-central-1', 56.130366, -106.346771),

('region', 'eu-central-1', 50.110922, 8.682127),

('region', 'eu-west-1', 53.142367, -7.692054),

('region', 'eu-west-2', 51.507351, -0.127758),

('region', 'eu-west-3', 48.856614, 2.352222),

('region', 'ap-northeast-1', 35.689487, 139.691706),

('region', 'ap-northeast-2', 37.566535, 126.977969),

('region', 'ap-northeast-3', 34.693738, 135.502165),

('region', 'ap-southeast-1', 1.352083, 103.819836),

('region', 'ap-southeast-2', -33.86882, 151.209296),

('region', 'ap-south-1', 19.075984, 72.877656),

('region', 'sa-east-1', -23.55052, -46.633309),

('region', 'eastasia', 22.267, 114.188),

('region', 'southeastasia', 1.283, 103.833),

('region', 'centralus', 41.5908, -93.6208),

('region', 'eastus', 37.3719, -79.8164),

('region', 'eastus2', 36.6681, -78.3889),

('region', 'westus', 37.783, -122.417),

('region', 'northcentralus', 41.8819, -87.6278),

('region', 'southcentralus', 29.4167, -98.5),

('region', 'northeurope', 53.3478, -6.2597),

('region', 'westeurope', 52.3667, 4.9),

('region', 'japanwest', 34.6939, 135.5022),

('region', 'japaneast', 35.68, 139.77),

('region', 'brazilsouth', -23.55, -46.633),

('region', 'australiaeast', -33.86, 151.2094),

('region', 'australiasoutheast', -37.8136, 144.9631),

('region', 'southindia', 12.9822, 80.1636),

('region', 'centralindia', 18.5822, 73.9197),

('region', 'westindia', 19.088, 72.868),

('region', 'canadacentral', 43.653, -79.383),

('region', 'canadaeast', 46.817, -71.217),

('region', 'uksouth', 50.941, -0.799),

('region', 'ukwest', 53.427, -3.084),

('region', 'westcentralus', 40.890, -110.234),

('region', 'westus2', 47.233, -119.852),

('region', 'koreacentral', 37.5665, 126.9780),

('region', 'koreasouth', 35.1796, 129.0756),

('region', 'francecentral', 46.3772, 2.3730),

('region', 'francesouth', 43.8345, 2.1972),

('region', 'us-east1', 33.836082, -81.163727),

('region', 'us-east4', 37.478397, -76.453077),

('region', 'us-central1', 42.032974, -93.581543),

('region', 'us-west1', 43.804133, -120.554201),

('region', 'northamerica-northeast1', 56.130366, -106.346771),

('region', 'europe-west1', 50.44816, 3.81886),

('region', 'europe-west2', 51.507351, -0.127758),

('region', 'europe-west3', 50.110922, 8.682127),

('region', 'europe-west4', 53.4386, 6.8355),

('region', 'europe-west6', 47.3769, 8.5417),

('region', 'asia-east1', 24.0717, 120.5624),

('region', 'asia-east2', 24.0717, 120.5624),

('region', 'asia-northeast1', 35.689487, 139.691706),

('region', 'asia-southeast1', 1.352083, 103.819836),

('region', 'australia-southeast1', -33.86882, 151.209296),

('region', 'asia-south1', 19.075984, 72.877656),

('region', 'southamerica-east1', -23.55052, -46.633309),

('region', 'gcp-europe-west4', 53.4386, 6.8355),

('region', 'gcp-us-west2', 43.804133, -120.554201),

('region', 'gcp-australia-southeast1', -33.86882, 151.209296),

('region', 'asia-southeast-1', 1.290270, 103.851959),

('region', 'asia-southeast-2', -6.173292, 106.841036),

('region', 'asia-southeast-3', 3.140853, 101.693207),

('region', 'au-nsw', -31.86882, 152.209296),

('region', 'au-vic', -37.5, 144.5),

('region', 'sa', -23.55052, -46.633309);

Now, let's configure the regions and enable region survival:

create database test;

use test;

-- Add the 6 regions

alter database test primary region "eu-north-1";

alter database test add region "eu-west-1";

alter database test add region "eu-west-2";

alter database test add region "us-east-2";

alter database test add region "us-east-1";

alter database test add region "us-west-1";

show regions;

-- Add the super regions

SET enable_super_regions = 'on';

ALTER DATABASE test ADD SUPER REGION eu VALUES "eu-north-1","eu-west-1","eu-west-2";

ALTER DATABASE test ADD SUPER REGION us VALUES "us-west-1","us-east-2","us-east-1";

-- Enable region survival

ALTER DATABASE test SURVIVE REGION FAILURE;

Next, let's verify that we have two super regions:

SHOW SUPER REGIONS FROM DATABASE test;

Add Test Data

The main schema parts are now done so let's add two tables and some sample data. The first table postal_codes is a global table and the second table users is using regional-by-row locality.

-- Add a GLOBAL table

create table postal_codes

(

id int primary key,

code string

);

ALTER TABLE postal_codes SET LOCALITY GLOBAL;

-- Insert some data

insert into postal_codes (id, code)

select unique_rowid() :: int,

md5(random()::text)

from generate_series(1, 100);

-- Add a regional-by-row table

CREATE TABLE users

(

id INT NOT NULL,

name STRING NULL,

postal_code STRING NULL,

PRIMARY KEY (id ASC)

);

-- Make it RBR

ALTER TABLE users SET LOCALITY REGIONAL BY ROW;

insert into users (id,name,postal_code,crdb_region)

select no,

gen_random_uuid()::string,

'123 45',

'eu-north-1'

from generate_series(1, 10) no;

insert into users (id,name,postal_code,crdb_region)

select no,

gen_random_uuid()::string,

'123 45',

'eu-west-1'

from generate_series(11, 20) no;

insert into users (id,name,postal_code,crdb_region)

select no,

gen_random_uuid()::string,

'123 45',

'eu-west-2'

from generate_series(21, 30) no;

insert into users (id,name,postal_code,crdb_region)

select no,

gen_random_uuid()::string,

'123 45',

'us-east-1'

from generate_series(31, 40) no;

insert into users (id,name,postal_code,crdb_region)

select no,

gen_random_uuid()::string,

'123 45',

'us-east-2'

from generate_series(41, 50) no;

insert into users (id,name,postal_code,crdb_region)

select no,

gen_random_uuid()::string,

'123 45',

'us-west-1'

from generate_series(51, 60) no;

select *,crdb_region from users;

select crdb_region,count(1) from users group by crdb_region;

Verify Zone Configuration

Let's look at the zone configurations before applying secondary regions. This will tell what the voter constraints and lease preferences are for each table.

select raw_config_sql from [show zone configuration for table postal_codes];

Output:

ALTER TABLE postal_codes CONFIGURE ZONE USING

range_min_bytes = 134217728,

range_max_bytes = 536870912,

gc.ttlseconds = 90000,

global_reads = true,

num_replicas = 7,

num_voters = 5,

constraints = '{+region=eu-north-1: 1, +region=eu-west-1: 1, +region=eu-west-2: 1, +region=us-east-1: 1, +region=us-east-2: 1, +region=us-west-1: 1}',

voter_constraints = '{+region=eu-north-1: 2}',

lease_preferences = '[[+region=eu-north-1]]'

(1 row)

-- For users table:

-- select raw_config_sql from [show zone configuration for table users];

ALTER DATABASE test CONFIGURE ZONE USING

range_min_bytes = 134217728,

range_max_bytes = 536870912,

gc.ttlseconds = 90000,

num_replicas = 7,

num_voters = 5,

constraints = '{+region=eu-north-1: 1, +region=eu-west-1: 1, +region=eu-west-2: 1, +region=us-east-1: 1, +region=us-east-2: 1, +region=us-west-1: 1}',

voter_constraints = '{+region=eu-north-1: 2}',

lease_preferences = '[[+region=eu-north-1]]'

(1 row)

Add Secondary Region

Now we are ready to add the secondary region. We verified that eu-north-1 is the primary region and part of super region eu, so we add eu-west-1 as the secondary region:

ALTER DATABASE test SET SECONDARY REGION "eu-west-1";

show regions;

Let's see what changed in the zone configs:

select raw_config_sql from [show zone configuration for table postal_codes];

select raw_config_sql from [show zone configuration for table users];

Output:

ALTER TABLE postal_codes CONFIGURE ZONE USING

range_min_bytes = 134217728,

range_max_bytes = 536870912,

gc.ttlseconds = 90000,

global_reads = true,

num_replicas = 8,

num_voters = 5,

constraints = '{+region=eu-north-1: 1, +region=eu-west-1: 1, +region=eu-west-2: 1, +region=us-east-1: 1, +region=us-east-2: 1, +region=us-west-1: 1}',

voter_constraints = '{+region=eu-north-1: 2, +region=eu-west-1: 2}',

lease_preferences = '[[+region=eu-north-1], [+region=eu-west-1]]'

(1 row)

-- And

ALTER TABLE users CONFIGURE ZONE USING

range_min_bytes = 134217728,

range_max_bytes = 536870912,

gc.ttlseconds = 90000,

num_replicas = 8,

num_voters = 5,

constraints = '{+region=eu-north-1: 1, +region=eu-west-1: 1, +region=eu-west-2: 1, +region=us-east-1: 1, +region=us-east-2: 1, +region=us-west-1: 1}',

voter_constraints = '{+region=eu-north-1: 2, +region=eu-west-1: 2}',

lease_preferences = '[[+region=eu-north-1], [+region=eu-west-1]]'

(1 row)

We can tell that adding a secondary region resulted in the lease preferences now having two voting replicas in the secondary region.

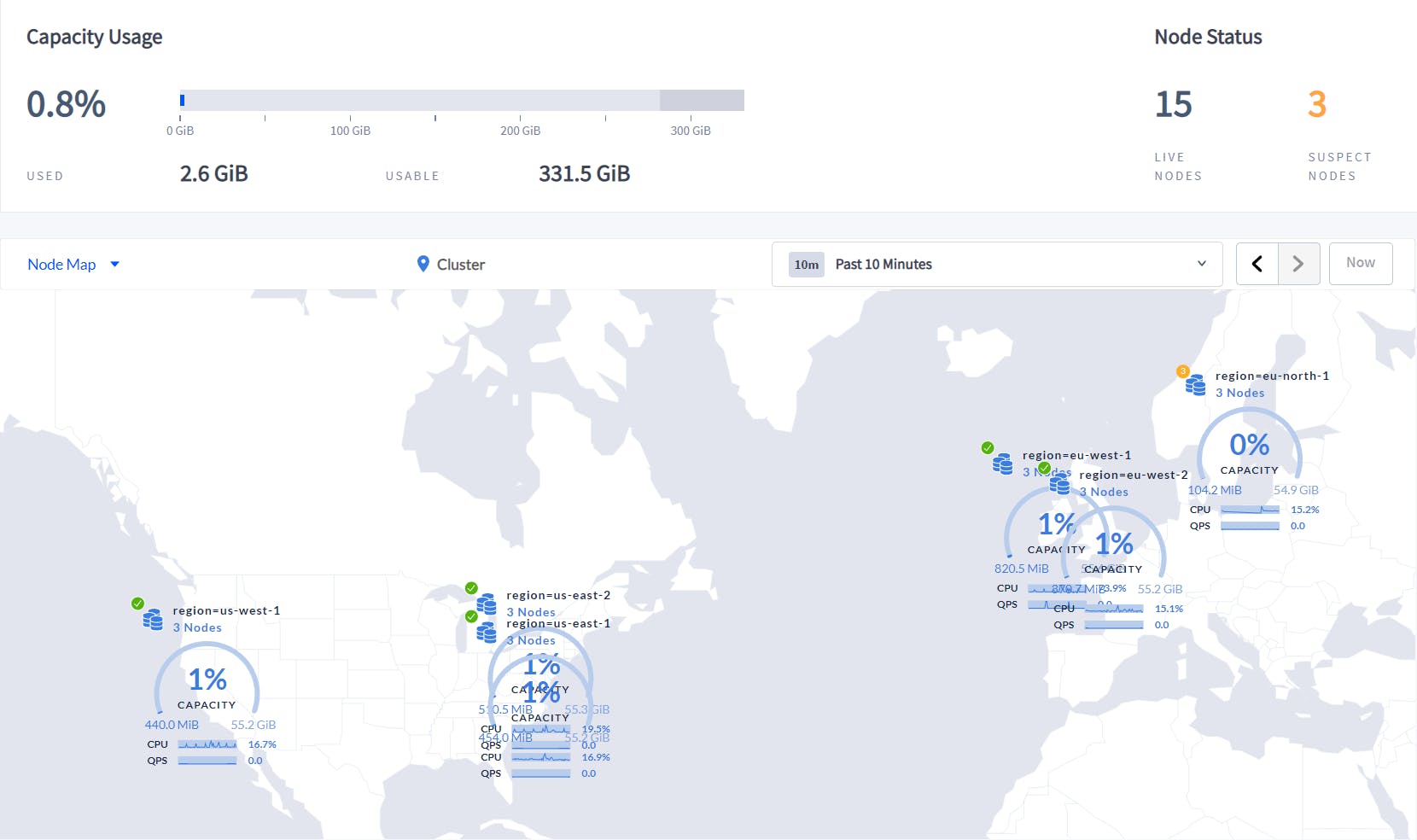

Simulate Region Failure

Let's simulate a primary region failure by simply killing the region nodes ungracefully.

ps -ef | grep cockroach | grep region=eu-north-1

-- note PIDs, then run:

kill -TERM id1

kill -TERM id2

kill -TERM id2

Check the range lease holder locality for a potential user row with id #1:

SHOW RANGE FROM TABLE users FOR ROW ('eu-north-1',1);

Notice region=eu-west-1,zone=eu-west-1b in the output:

start_key | end_key | range_id | lease_holder | lease_holder_locality | replicas | replica_localities

------------+-------------------+----------+--------------+----------------------------------+---------------+---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

/"\x80" | /"\x80"/PrefixEnd | 55 | 3 | region=eu-west-1,zone=eu-west-1b | {1,3,8,12,17} | {"region=eu-north-1,zone=eu-north-1a","region=eu-west-1,zone=eu-west-1b","region=eu-west-1,zone=eu-west-1c","region=eu-north-1,zone=eu-north-1c","region=eu-west-2,zone=eu-west-2b"}

(1 row)

Lastly, if you restart the 3 nodes again (just re-run the original script) you will see the lease-holder reverting to the primary region (region=eu-north-1,zone=eu-north-1b):

SHOW RANGE FROM TABLE users FOR ROW ('eu-north-1',1);

start_key | end_key | range_id | lease_holder | lease_holder_locality | replicas | replica_localities

------------+-------------------+----------+--------------+------------------------------------+--------------+---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

/"\x80" | /"\x80"/PrefixEnd | 55 | 7 | region=eu-north-1,zone=eu-north-1b | {1,3,7,8,17} | {"region=eu-north-1,zone=eu-north-1a","region=eu-west-1,zone=eu-west-1b","region=eu-north-1,zone=eu-north-1b","region=eu-west-1,zone=eu-west-1c","region=eu-west-2,zone=eu-west-2b"}

(1 row)

Conclusion

This article explains how to configure CockroachDB's Secondary Regions feature, which allows for control over failover in the event of a primary region going down. It provides a demo of how to set up a global cluster, how to configure the database for region-level survival, and how to add localities to the node map. It also provides instructions on how to add two tables and sample data, and how to verify the zone configuration before applying secondary regions.